Authentication and authorization in a microservice architecture: Part 3 - implementing authorization using JWT-based access tokens


This article is the third in a series of articles about authentication and authorization in a microservice architecture. The complete series is:

- Overview of authentication and authorization in a microservice architecture
- Implementing complex authorization using Oso Cloud local authorization

The previous article described how to implement authentication in a microservice architecture using OAuth 2.0 and OpenID Connect (OIDC). This article begins a four-part exploration of implementing authorization in a microservice architecture. These articles focus on the service collaboration and implementation challenges that arise when the data required for authorization decisions is distributed across multiple services. They do not cover the design of an authorization model - that is, defining roles, permissions, and access control policies - that satisfies an organization’s security and business requirements.

In this article, I explore how a service can implement authorization using JSON Web Token (JWT)-based access tokens, which are issued by the IAM Service as part of the OAuth 2.0 and OpenID Connect (OIDC)-based authentication process described in part 2.

There are four sections:

- Section 1 - an overview of authorization in a microservice architecture, including the role of backend services in making access control decisions and the data they need to make those decisions.
- Section 2 - describes four strategies that a service can use to obtain authorization-related data from other services.
- Section 3 - discusses how to use JWT-based access tokens to carry authorization data, along with the trade-offs and limitations of this approach.
- Section 4 - explores example authorization scenarios from the RealGuardIO application illustrating when access tokens are sufficient, and when other strategies are required.

Let’s start with a quick overview of authorization in a microservice architecture.

Overview of authorization in a microservice architecture

In a microservice architecture, numerous architectural elements potentially play a role in authorization. This includes network-level infrastructure on the path from the user’s browser to the backend services, as well as the Backend-for-Frontend (BFF), which is the entry point into the application. However, the primary responsibility for authorization rests with the services themselves. Each service is responsible for protecting its own resources and making authorization decisions for the requests it handles.

Authorization requires distributed data

To authorize a request, a service must answer three key questions:

- Who is the user? Established by the authentication process and represented by the access token, typically a JSON Web Token (JWT).
- What is the user allowed to do? Defined by roles, permissions, relationships, or other attributes.
- What data does the service need to make this decision? The required authorization data might be owned by the service itself or by other services in the system.

In a microservice architecture, this authorization data is often distributed across multiple services. This creates service collaboration and implementation challenges that simply do not arise in a monolithic application. To manage these distributed authorization challenges, a microservice architecture relies on a flow of identity and authorization data throughout the system.

Propagating identity and authorization data

The flow begins with authentication, which establishes the user’s identity.

The outcome of the authentication process described in part 2 is a session cookie in the user’s browser that contains a JWT-based access token issued by the IAM Service.

The access token contains information about the user including their identity and roles, and plays a central role in the authorization process.

When the logged-in user performs actions in the UI, the browser-based UI makes HTTP requests to the BFF (Backend for Frontend) that include the session cookie.

The BFF extracts the access token from the session cookie and includes it in the HTTP requests that it makes to backend services.

To understand how an application, such as RealGuardIO, authorizes requests, let’s first examine the sequence of events that occurs when the user clicks the Disarm button in the UI. After that, I will describe how a backend service obtains the authorization data it needs to authorize a request.

Authorization flow: from browser to backend service

The following diagram shows the sequence of events that occurs when the user clicks the disarm button and the browser makes an HTTP PUT request to the BFF:

The sequence of events is as follows:

- The user clicks the disarm button in the UI.
- The UI sends a request to disarm the security system to the BFF (Backend for Frontend). It includes the session cookie.
- (Optional) An edge network element verifies that the request contains a valid session cookie.
- The BFF extracts the access token from the session cookie.
- (Optional) The BFF validates the access token, and may perform authorization based on its contents.
- The BFF forwards the request to the backend service, e.g. Security System Service, including the access token.
- (Optional) An inter-service network element validates the access token and may enforce authorization policies.
- The backend service verifies that the user is authorized to disarm the security system.

Because the edge network, the BFF and inter-service network elements only have access to the HTTP request rather than application data, they typically have a limited role in the authorization process. In most cases, it is the backend service that must perform the authorization check. That’s because backend services have access to application data, both their own data and, when needed, data owned by other services.

Let’s now look at how a backend service obtains the authorization data it needs to authorize a request.

How a backend service obtains the authorization data

When a backend service, such as the Security System Service, receives a request it must perform an authorization check.

As I described in part 1, an authorization check can be modeled by the isAllowed(user, operation, resource) function, which verifies that a user can perform a specific operation on a given resource.

The isAllowed() function typically uses one or more authorization models:

- RBAC (Role-Based Access Control) - the roles assigned to the user
- ReBAC (Relationship-Based Access Control) - the user’s relationship to the resource
- ABAC (Attribute-Based Access Control) - attributes of the user, resource, and environment
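To make the three models concrete, here is a minimal sketch of an isAllowed()-style function that combines them. The `User` and `Resource` types, the `REQUIRED_ROLE` policy table, the `EDITOR` role, and the 09:00-17:00 window are all hypothetical and are not taken from RealGuardIO; they only illustrate where each model plugs in.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    user_id: str
    roles: frozenset               # RBAC input: roles assigned to the user
    time_restricted: bool = False  # ABAC input: an attribute of the user


@dataclass(frozen=True)
class Resource:
    resource_id: str
    owner_id: str                  # ReBAC input: who the resource belongs to


# Hypothetical policy table: operation -> role required to perform it
REQUIRED_ROLE = {"update": "EDITOR"}


def is_allowed(user: User, operation: str, resource: Resource, hour_of_day: int) -> bool:
    # RBAC: the user must hold the role that the operation requires
    required = REQUIRED_ROLE.get(operation)
    if required is None or required not in user.roles:
        return False
    # ReBAC: the user must be related to the resource (here: be its owner)
    if resource.owner_id != user.user_id:
        return False
    # ABAC: an environment attribute (time of day) restricts some users
    if user.time_restricted and not (9 <= hour_of_day < 17):
        return False
    return True
```

Each check needs different data: the roles, the resource’s owner, and the current time. Where that data lives is what the rest of this section is about.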

To make an authorization decision using these models, the isAllowed() function needs authorization data.

This includes:

- Information about the user, such as their identity and roles
- Application-specific data, such as business entities (also called resources), their attributes, and their relationships.

It’s helpful to categorize authorization data based on where it resides and who owns it.

Three types of authorization data

It’s useful to think of authorization data in a microservice architecture as belonging to three categories:

- Built-in - the user’s identity and roles that are owned by the IAM Service and are provided by the JWT-based access token
- Local - the service’s own data
- Remote - data owned by other services

As you will see, implementing authorization checks using built-in and local authorization data is typically straightforward. But authorization checks using remote authorization data are more complicated and introduce service collaboration challenges. Let’s first look at using the built-in and local authorization data.

Using built-in and local authorization data

For some operations, isAllowed() can make the authorization decision using just the service’s own data and the user identity and roles provided by the access token.

A common example is RBAC.

The service simply needs the user’s identity and roles from the access token to authorize the request.

For instance, later in this article, you will see how onboarding a security system dealer is authorized using RBAC.

If the service owns additional authorization data required for ReBAC or ABAC, then authorization remains straightforward. It makes authorization decisions using a combination of the JWT’s claims and its own data. For example, in section RealGuardIO authorization scenarios, I describe how user profile management and managing an employee’s roles are authorized using ReBAC.

However, not all authorization decisions can rely solely on built-in or local data. For many operations, the isAllowed() function also requires remote authorization data—information that is owned by other services. Accessing this remote data introduces additional complexity and service collaboration challenges. Let’s look at how a backend service can obtain remote authorization data.

Obtaining remote authorization data

In a microservice architecture, a service’s isAllowed() function often needs remote authorization data - information owned by other services.

For example, to authorize a disarm request, the Security System Service needs information from multiple services, including the user’s roles in the CustomerOrganization and the CustomerOrganization’s SecuritySystems from the Customer Service.

The challenge is that in a microservice architecture, each service’s data is accessible only through its API.

To preserve loose design-time coupling, which is a defining characteristic of the microservice architecture, the Security System Service cannot directly access the Customer Service’s database.

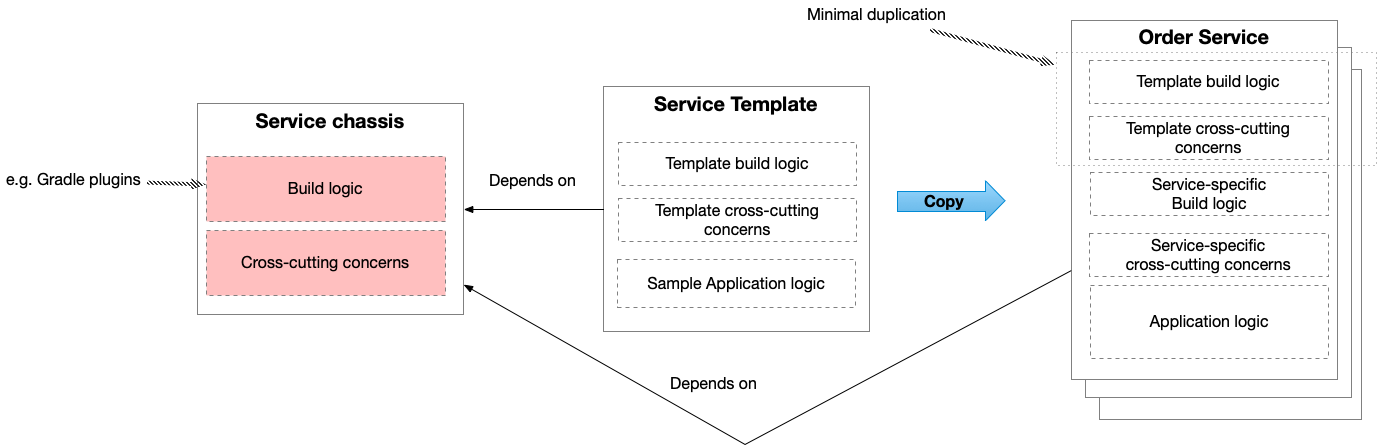

Strategies for obtaining remote authorization data

When a service needs remote authorization data, a critical design decision is how to get it. Picking a strategy has trade-offs in complexity, coupling, performance, and data freshness.

There are three different strategies that a backend service’s isAllowed() function can use to obtain remote data.

In addition, there’s a fourth strategy that uses an entirely different approach to authorization: delegating the authorization decision to a centralized authorization service.

The following diagram summarizes the four strategies:

There are four strategies:

| Strategy | Description |
|---|---|
| Provide | JWT-based access token provides the remote authorization data |
| Fetch | Backend service fetches the remote authorization data |
| Replicate | Backend service maintains a replica of the remote authorization data |
| Delegate | Backend service delegates authorization decision-making to an authorization service |

In practice, a backend service’s endpoint will either:

- Use a combination of the first three strategies to obtain the authorization data it needs.
- Delegate to an authorization service

Let’s look at each strategy in more detail.

Provide remote authorization data in the access token

One convenient way for a backend service to obtain the authorization data from other services is to provide it in the access token.

When the IAM Service issues an access token, it can include the authorization data in the JWT’s claims in addition to the user’s identity and roles.

It uses some combination of the fetch or replicate strategies to obtain the authorization data from the services that own it.

This strategy has some important benefits:

- It simplifies the service
- It can improve runtime behavior by avoiding additional inter-service requests

However, this strategy works best when the authorization data is:

- small - coarse-grained authorization rather than fine-grained
- stable - unlikely to change frequently

This strategy has several drawbacks, which I describe in more detail below.

It’s also important to remember that the contents of the access token are an application-level design decision.

It might not be possible to satisfy the needs of all services using the provide strategy.

As a result, while convenient in some scenarios, the provide strategy is often not the best choice for handling complex authorization requirements in a microservice architecture.

Fetch remote authorization data dynamically

If the remote authorization data cannot be passed in the access token, then the backend service can fetch the information from the service that owns it.

For example, when handling a disarm() request, the Security System Service can make an HTTP request to the Customer Service to retrieve the user’s organization and their roles in that organization.

Not only does this keep the access token lean, but, as I describe below, it also avoids the risk of stale information in the access token.

However, since this is service collaboration, there are several critical issues that you must carefully consider:

- Simplicity of communication - the interactions between the two services should be simple and easy to understand
- Efficiency of communication - the interactions between the two services should be efficient
- Increased runtime coupling - the backend service depends on the other service at runtime, which increases its latency and reduces its availability
- Increased design-time coupling - the backend service uses the other service’s API, and so there’s a risk that both services need to change in lockstep

As a result, while the fetch strategy can work well in some scenarios, it is not always the best solution.

For example, in cases where runtime coupling is unacceptable, you may need to consider the replicate strategy instead.
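The runtime coupling of the fetch strategy can be sketched as follows. This is an illustration only: `fetch_roles` is a hypothetical stand-in for the HTTP request to the Customer Service, and denying the request when that call fails (failing closed) is my assumption about a reasonable default, not something prescribed by the application.

```python
from typing import Callable, Set


class AuthorizationDataUnavailable(Exception):
    """Raised by the (hypothetical) client when the owning service cannot be reached."""


def is_allowed_to_disarm(
    user_id: str,
    customer_id: str,
    fetch_roles: Callable[[str, str], Set[str]],
) -> bool:
    # fetch_roles stands in for a remote call, e.g. an HTTP request to the
    # Customer Service for the user's roles in the given organization.
    try:
        roles = fetch_roles(user_id, customer_id)
    except AuthorizationDataUnavailable:
        # Runtime coupling made visible: if the other service is down,
        # this service cannot authorize the request. Fail closed.
        return False
    return "SECURITY_SYSTEM_DISARMER" in roles
```

Every authorization check now pays the latency of the remote call, and the availability of the endpoint is bounded by the availability of the service it fetches from.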

Replicate remote authorization data from other services

An alternative to a service fetching the information each time is for it to use the CQRS pattern and maintain a local replica of the data within the service’s database.

For example, the Security System Service can maintain a replica of a customer’s employees, roles and security systems by subscribing to domain events published by the Customer Service whenever its data changes.

This strategy has the benefits of keeping the access token lean without the inherent runtime coupling of the fetch strategy.

Yet at the same time, the replicate strategy is also a form of service collaboration and so has its own drawbacks and limitations:

- Increased complexity and storage requirements - the backend service needs to maintain the replica
- Increased risk of inconsistent data - the replica may lag behind the source data, leading to authorization decisions based on stale information.
- Increased design-time coupling - the backend service uses the other service’s event publishing API, and so there’s a risk that both services need to change in lockstep
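As a rough sketch of the replicate strategy, the following class keeps an in-memory replica of role assignments that is updated by domain events. The event types (`CustomerEmployeeRolesUpdated`, `CustomerEmployeeRemoved`) and their fields are hypothetical; the actual events published by the Customer Service are not described here, and a real service would store the replica in its database rather than in a dictionary.

```python
class CustomerRoleReplica:
    """Local, eventually consistent copy of role assignments owned by another service."""

    def __init__(self) -> None:
        # (customer_id, employee_id) -> set of role names
        self._roles = {}

    def handle_event(self, event: dict) -> None:
        # Called by the event subscriber for each (hypothetical) domain event.
        key = (event["customerID"], event["employeeID"])
        if event["type"] == "CustomerEmployeeRolesUpdated":
            self._roles[key] = set(event["roles"])
        elif event["type"] == "CustomerEmployeeRemoved":
            self._roles.pop(key, None)

    def has_role(self, customer_id: str, employee_id: str, role: str) -> bool:
        # Purely local lookup: no request to the owning service at check time.
        return role in self._roles.get((customer_id, employee_id), set())
```

The authorization check itself no longer depends on the other service being available, but its answer is only as fresh as the last event that was processed.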

Delegate to an authorization service

The fourth strategy is for a backend service to delegate the authorization decision to an authorization service, such as Oso or AWS Verified Permissions.

Instead of the backend service implementing isAllowed(), it simply calls the authorization service to make the decision.

The authorization service responds with either PERMIT or DENY.
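In code, delegation might look like the sketch below. The `AuthorizationService` interface and its `authorize()` method are hypothetical and do not correspond to the API of Oso, AWS Verified Permissions, or any other product; the in-memory implementation exists only to make the example runnable.

```python
from enum import Enum
from typing import Iterable, Protocol, Tuple


class Decision(Enum):
    PERMIT = "PERMIT"
    DENY = "DENY"


class AuthorizationService(Protocol):
    # Hypothetical client interface for a centralized authorization service
    def authorize(self, user_id: str, operation: str, resource_id: str) -> Decision: ...


class InMemoryAuthorizationService:
    """Stand-in for the remote authorization service, for illustration only."""

    def __init__(self, permitted: Iterable[Tuple[str, str, str]]) -> None:
        self._permitted = set(permitted)

    def authorize(self, user_id: str, operation: str, resource_id: str) -> Decision:
        allowed = (user_id, operation, resource_id) in self._permitted
        return Decision.PERMIT if allowed else Decision.DENY


def disarm(security_system_id: str, user_id: str, authz: AuthorizationService) -> str:
    # The backend service asks for a decision instead of implementing isAllowed()
    if authz.authorize(user_id, "disarm", security_system_id) is not Decision.PERMIT:
        raise PermissionError(f"{user_id} may not disarm {security_system_id}")
    return "DISARMED"
```

The backend service contains no authorization logic of its own; it only enforces the decision it receives.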

A key benefit of this approach is that it simplifies the backend service. It’s no longer responsible for implementing the authorization logic, including obtaining the necessary authorization data. This strategy can significantly reduce application complexity, especially when multiple backend services have complex authorization requirements. Moreover, a centralized authorization service can provide a consistent and correct authorization model across the application, making it easier to manage and maintain.

However, this approach has a few potential drawbacks:

- increased runtime coupling - The backend service is dependent on the availability and performance of the authorization service.
- coupling through data dependencies - The authorization service typically needs authorization data from the backend services, which must be obtained using either the provide, fetch or replicate strategies. This creates either design-time or runtime coupling between the authorization service and the backend services.

The delegation strategy will be described in detail in parts 5 and 6 of this series.

No silver bullets

As you can see, none of the four strategies is a silver bullet, as is quite often the case in a microservice architecture. To help choose the right approach for a given scenario, the table below compares the four strategies across key dimensions such as simplicity, coupling, and data freshness.

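| Strategy | Benefits | Drawbacks | Best used when |
|---|---|---|---|
| Provide | Simplifies the service; avoids additional inter-service requests | Couples the IAM Service to backend services; token data can be stale; token size limits; claims can expose sensitive data | The authorization data is small and stable |
| Fetch | Keeps the access token lean; avoids stale data in the access token | Runtime coupling (higher latency, lower availability); design-time coupling to the other service’s API | Runtime coupling is acceptable |
| Replicate | Keeps the access token lean; avoids the runtime coupling of fetch | More complexity and storage; the replica may lag behind the source; design-time coupling to the other service’s events | Runtime coupling is unacceptable |
| Delegate | Simplifies the backend services; consistent authorization model across the application | Runtime coupling to the authorization service; coupling through data dependencies | Multiple backend services have complex authorization requirements |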

Choosing the right approach depends on your specific authorization requirements and architecture.

In some cases, the authorization rules generate what are known as dark matter forces that resist decomposition and force you to refactor your architecture to simplify their implementation.

The fetch and replicate strategies will be discussed in more detail in the next article in this series.

Let’s now take a closer look at the provide strategy.

Using JWT-based access tokens for authorization

From the perspective of a service developer, the provide strategy - passing remote authorization data in the access token - can seem like an ideal solution.

Their service’s isAllowed() function has everything it needs to make the authorization decision.

Sometimes, this approach works quite well and simplifies service logic.

However, access tokens have significant limitations that make them unsuitable for many authorization scenarios.

In this section, I explore the design issues that you need to consider when using access tokens for authorization. I will first describe how to represent authorization data as JWT claims. After that, I will discuss the various drawbacks of using access tokens for authorization. But first, let’s start with a quick overview of JWTs.

Overview of JSON Web Tokens (JWTs)

As described in part 2, a JSON Web Token (JWT) is a signed JSON document that conveys information about the user and the token itself. Specifically, a JWT consists of three parts:

- Header - metadata about the token, such as the algorithm used to sign it
- Payload - a JSON document consisting of a set of claims, which are key-value pairs containing information about the user and the token itself
- Signature - a cryptographic signature of the header and payload, used to verify the token’s authenticity

Before using a JWT to authorize a request, a recipient, such as a backend service, must validate it by checking:

- The iss (issuer) claim matches the expected IAM Service
- The exp (expiration) claim is in the future
- The signature is valid, by recomputing it using the same algorithm and key and comparing it to the token’s signature

Once the JWT is validated, the recipient can use the claims in the JWT to make the authorization decision.
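The three validation checks can be sketched with only the Python standard library. This example assumes the HS256 (HMAC-SHA256) algorithm with a shared key, which is what makes "recomputing" the signature possible; an IAM service may instead sign with an asymmetric algorithm, in which case the signature is verified with the issuer’s public key. The function names are mine, and production code would normally rely on a maintained JWT library rather than hand-written validation.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(segment: str) -> bytes:
    # JWT segments omit base64 padding, so restore it before decoding
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def sign_hs256(claims: dict, key: bytes) -> str:
    """Builds a header.payload.signature token (used here only to create test input)."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url_encode(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + _b64url_encode(signature)


def validate_hs256(token: str, key: bytes, expected_issuer: str,
                   now: Optional[float] = None) -> dict:
    """Returns the claims if the token is valid; raises ValueError otherwise."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(payload_b64))
        signature = _b64url_decode(signature_b64)
    except ValueError as exc:
        raise ValueError("malformed token") from exc
    # Only accept the algorithm we expect; never trust the header blindly
    if header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    # Signature: recompute over header.payload and compare in constant time
    expected = hmac.new(
        key, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256
    ).digest()
    if not hmac.compare_digest(signature, expected):
        raise ValueError("invalid signature")
    # iss: the token must come from the expected IAM Service
    if claims.get("iss") != expected_issuer:
        raise ValueError("unexpected issuer")
    # exp: the expiration time must be in the future
    if claims.get("exp", 0) <= (time.time() if now is None else now):
        raise ValueError("token expired")
    return claims
```

The signature is checked before any claim is read, so that no decision is ever based on unauthenticated data.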

Representing authorization data in JWT claims

Authorization data in a JWT can be represented by two types of claims:

- standard claims - defined by the JWT, OAuth2 and OpenID Connect specifications
- custom claims - application-defined claims that contain additional authorization data

Let’s look at each in more detail.

Standard sub and scopes claims

The two standard claims that are relevant for authorization are:

- sub - Represents the user. However, some authorization servers set this claim to the user’s username, such as their email address, which is mutable. For that reason, some applications use an additional custom claim, such as userID, to store the user’s unique identifier.
- scopes - a space-separated list of scope names representing a set of permissions, which a resource server, such as a backend service, can use to make authorization decisions.

OAuth2 doesn’t define any specific scope names, although OIDC defines a set of standard scopes, such as openid (used to request OIDC authentication), profile, and email.

Applications often define their own scopes to represent the allowed permissions.

A scope can be a verb, such as read or write, or a noun, representing the type of resource, such as profile or securitySystem.

It might also be a combination of both, such as profile:read or securitySystem:disarm.

Scopes can also be roles.

Scopes are often used in third-party authorization scenarios, but they are often insufficient for applications, such as RealGuardIO, that have complex authorization requirements.
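A scope check is simple to express. The sketch below assumes the validated claims are available as a dictionary and that the space-separated list is in a claim named `scopes`, as in this article; the claim name used by your authorization server may differ, so treat it as an assumption.

```python
def has_scope(claims: dict, required: str, claim_name: str = "scopes") -> bool:
    # The claim is a single space-separated string, e.g. "openid profile:read"
    granted = claims.get(claim_name, "").split()
    return required in granted
```

For example, `has_scope(claims, "securitySystem:disarm")` answers whether the token grants that permission at all, but not whether it applies to a particular security system, which is why scopes alone are often too coarse.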

Custom claims

Custom claims allow an application to include any structured data needed for authorization. These claims are not defined by any standard and may contain strings, arrays, or even nested objects. Common examples include:

- userID - the user’s unique identifier.
- roles - an alternative to the scopes claim, which specifies the user’s application-level roles.
- organizationID - the customer or tenant that the user belongs to

In theory, a JWT-based access token can include any data services need to make authorization decisions. But in practice, there are some significant limitations - coupling, size, staleness, and security risks - which we will examine next.

Design issues when using JWT-based access tokens for authorization

While JWT-based access tokens can potentially simplify service design, there are significant design and operational trade-offs. Before relying on JWT-based access tokens for authorization, it’s important to consider the following issues:

- Risk of coupling of the IAM Service to backend services
- Access tokens are potentially stale
- Limits on the size of JWT-based access tokens
- Risk of hackers exploiting the JWT-based access token’s claims

Let’s look at these issues in more detail.

Risk of coupling of the IAM Service to backend services

To populate a JWT with authorization data, the IAM Service must obtain that data from the services that own it using either the fetch or replicate strategies described earlier.

This can lead to design-time coupling between the IAM Service and the backend services since it is dependent on their APIs.

Increased design-time coupling results in more frequent changes to the IAM Service, which potentially impacts its stability.

What’s more, the fetch strategy can also lead to runtime coupling, which can impact the IAM Service’s availability and performance.

Consequently, you should carefully consider the implications of this design decision.

The following diagram shows how the fetch and replicate strategies impact the IAM Service:

While the IAM Service is the natural source of user identity and application-level roles, it may not own relationship-based or domain-specific authorization data (e.g., a user’s role in a customer organization).

Embedding such data in the token shifts complexity away from the backend services — but increases the complexity and fragility of the IAM Service, a critical security component.

Access tokens are potentially stale

A JWT-based access token is a point-in-time snapshot of the user’s authorization data at the time it was issued. If the authorization data changes, the access token will not reflect those changes until it is refreshed. This can create risks such as:

- Users retaining permissions they should no longer have
- Users not having permissions they should have

Sometimes, requiring the user to log in again to obtain a new access token with additional permissions is acceptable. And, it might be possible to handle permission revocation by forcing a logout. Whether this is acceptable depends on how frequently the data changes and how quickly the tokens are refreshed. For dynamic or sensitive permissions, this staleness can be unacceptable.

Access token size limits

Access tokens are typically transmitted via HTTP headers or stored in cookies, both of which have practical size limits. Adding large amounts of authorization data can lead to:

- Large tokens that exceed browser or infrastructure limits
- Increased network latency and processing overhead
- Higher signature computation costs

In a microservice architecture, these factors can impact both performance and reliability.
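To get a feel for how quickly a token grows, the following sketch measures the encoded size of a payload that carries a list of resource IDs. The claim names and the ID format are illustrative assumptions; the measurement covers only the payload segment, so a complete token (header, signature, and any cookie encryption) is larger still.

```python
import base64
import json


def encoded_payload_size(claims: dict) -> int:
    """Length in bytes of the base64url-encoded JWT payload segment."""
    raw = json.dumps(claims, separators=(",", ":")).encode("utf-8")
    return len(base64.urlsafe_b64encode(raw).rstrip(b"="))


def claims_with_security_systems(count: int) -> dict:
    # Hypothetical claims: one fixed-width ID per security system the user can access
    return {
        "sub": "user-1",
        "roles": ["CUSTOMER_EMPLOYEE"],
        "securitySystems": [f"ss-{i:08d}" for i in range(count)],
    }


if __name__ == "__main__":
    for count in (10, 100, 1000):
        size = encoded_payload_size(claims_with_security_systems(count))
        print(f"{count} security systems -> {size} bytes")
```

The size grows linearly with the number of IDs, so a user with access to many resources can push the payload past the roughly 4 KB that browsers commonly allow per cookie.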

Exposure of sensitive data

By default, a JWT is a signed document but is not encrypted. While the RealGuardIO application mitigates this risk by storing access tokens in encrypted session cookies, other applications might not. If an unencrypted JWT is accessible, then an attacker can read its contents - including any sensitive information in the claims, such as resource IDs or even roles. For this reason, it’s best to avoid placing sensitive or detailed resource-level data in JWT claims unless it is encrypted or absolutely necessary.
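The following sketch shows why a signed-only JWT offers no confidentiality: the payload is just base64url-encoded JSON, so it can be read without any key. The function name is mine.

```python
import base64
import json


def read_claims_without_key(token: str) -> dict:
    # No key and no signature check: anyone holding the token can do this
    payload_b64 = token.split(".")[1]
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))
```

The signature prevents tampering with the claims, not reading them.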

Now that we’ve explored the benefits and limitations of using JWT-based access tokens for authorization, let’s see how these trade-offs play out in practice. The following examples from the RealGuardIO application illustrate when access token-based authorization works well - and when it does not.

RealGuardIO authorization scenarios

The RealGuardIO application provides a useful case study for exploring different approaches to authorization in a microservice architecture. This section walks through four representative operations — some straightforward, others more complex — to illustrate when access token-based authorization is effective and when other strategies are required.

We’ll look at:

- onboardSecuritySystemDealer() - onboarding a new dealer
- updateProfile() - updating a customer employee’s profile
- updateRoles() - updating a customer employee’s roles in their Customer organization
- disarmSecuritySystem() - disarming a security system

Each of these operations has a corresponding REST endpoint in one of the backend services. The following diagram shows the architecture of the RealGuardIO application and the services that implement these operations:

As you will see, the authorization requirements for these operations vary significantly.

For the first three operations, the combination of the service’s own data and the built-in authorization data from the JWT is sufficient to make the authorization decision.

But for the last operation, disarmSecuritySystem(), the authorization decision requires remote authorization data from multiple services and might not be a good candidate for using JWT-based authorization.

Let’s look at the first scenario.

Scenario: Onboard a Security System Dealer

To allow a Security System Dealer to use the RealGuardIO application, they must first be onboarded.

This can only be done by a RealGuardIO employee with administrative privileges for dealer management.

These users are modeled with an application-level role: REALGUARDIO_DEALER_ADMINISTRATOR.

The onboarding logic is implemented by the Security System Dealer Service, which has a POST /dealers endpoint.

Before onboarding a dealer, the endpoint must verify that the user is authorized to do so.

That’s not something that the Security System Dealer Service knows.

But fortunately, it can simply verify that the access token’s roles claim contains the REALGUARDIO_DEALER_ADMINISTRATOR role.

If a user’s roles fit within the limits of the access token, this approach is a simple and effective example of RBAC using built-in authorization data from the access token. There is no need for additional service calls or data lookups, and the service logic remains clean and self-contained. The table below summarizes the characteristics of this scenario:

| Design issue | Assessment |
|---|---|
| Coupling of the IAM Service to backend services | None |
| Freshness of claims | Stable - the user’s roles are unlikely to change |
| Access token size | Small - the access token only contains the user’s roles |
| Security risk | Low - the access token does not contain any sensitive information |
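The check for this scenario fits in one line. This sketch assumes that the access token has already been validated and that its claims are available as a dictionary with a `roles` list, as described above; the function name is hypothetical.

```python
DEALER_ADMIN_ROLE = "REALGUARDIO_DEALER_ADMINISTRATOR"


def can_onboard_dealer(claims: dict) -> bool:
    # RBAC using only built-in authorization data from the access token
    return DEALER_ADMIN_ROLE in claims.get("roles", [])
```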

Let’s now look at the next scenario.

Scenario: Manage profiles

A customer employee has a personal profile that includes contact information, such as the phone number used for security notifications.

Each employee can view and update their own profile, but not those of other users.

Customer employees are modeled in the application by an application-level role, CUSTOMER_EMPLOYEE.

They also have roles in their Customer organization, which I describe in more detail below.

The Customer Service manages profiles and defines two endpoints: GET /profiles, which returns the user’s profile, and PUT /profiles, which updates the profile.

To authorize these requests, the service must verify that:

- The user has a CUSTOMER_EMPLOYEE application-level role
- The user is the owner of the profile

This is a straightforward case of an authorization check that uses local and built-in authorization data.

The service verifies that the JWT’s roles claim includes CUSTOMER_EMPLOYEE.

It also uses the sub (or userID) claim to access the profile in the service’s database.

This operation’s characteristics are identical to the previous scenario.
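A minimal sketch of this check follows. The `profiles_by_user_id` dictionary is a hypothetical stand-in for the service’s database, and the function name is mine. Because the profile is looked up by the identity in the token rather than by an ID in the request, a user can only ever reach their own profile.

```python
def load_own_profile(claims: dict, profiles_by_user_id: dict) -> dict:
    # Built-in data: the application-level role from the access token
    if "CUSTOMER_EMPLOYEE" not in claims.get("roles", []):
        raise PermissionError("CUSTOMER_EMPLOYEE role required")
    # Prefer the custom userID claim when present, otherwise fall back to sub
    user_id = claims.get("userID", claims.get("sub"))
    # Local data: the profile is keyed by the caller's own identity
    profile = profiles_by_user_id.get(user_id)
    if profile is None:
        raise LookupError("no profile for this user")
    return profile
```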

Scenario: Grant a Customer Employee permission to arm/disarm a security system

In RealGuardIO, a CustomerEmployee can be assigned one or more roles within their organization.

These roles determine what actions they are allowed to perform — such as arming or disarming security systems.

Role assignments are managed by users with administrative responsibility within the same customer organization.

Since these users are customer employees, they also have an application-level role of CUSTOMER_EMPLOYEE.

But they also have a CUSTOMER_EMPLOYEE_ADMINISTRATOR role in their Customer organization.

The Customer Service is responsible for managing customer employees and their roles.

It has a PUT /customers/{customerID}/employees/{employeeID}/roles endpoint that updates an employee’s roles.

The authorization check for this endpoint must verify the following:

- The user belongs to the organization identified by customerID
- The user has the CUSTOMER_EMPLOYEE_ADMINISTRATOR role in that organization
- The target employee (employeeID) belongs to the same organization

Implementing this authorization check is quite straightforward because the Customer Service owns both the roles that are being updated and the required authorization data.

The Customer Service simply needs the access token’s sub claim.

It can then retrieve the two CustomerEmployees and the Customer that the user belongs to, perform the check, and update the target employee’s CustomerEmployeeRoles.

The following diagram shows how this works:

This is a good example of ReBAC (relationship-based access control) where all the relationships are locally owned. No remote authorization data is needed, and the access token remains small. Let’s now look at a more problematic scenario.
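The three conditions of this check can be sketched as follows. The `employees_by_id` dictionary and its record shape (`customerID`, `roles`) are hypothetical stand-ins for the Customer Service’s own data; `user_id` comes from the access token’s sub claim.

```python
def can_update_roles(user_id: str, customer_id: str, employee_id: str,
                     employees_by_id: dict) -> bool:
    user = employees_by_id.get(user_id)
    target = employees_by_id.get(employee_id)
    if user is None or target is None:
        return False
    return (
        # The user belongs to the organization identified by customerID
        user["customerID"] == customer_id
        # The user has the administrator role in that organization
        and "CUSTOMER_EMPLOYEE_ADMINISTRATOR" in user["roles"]
        # The target employee belongs to the same organization
        and target["customerID"] == customer_id
    )
```

Every lookup is against data the service already owns, which is what keeps this scenario simple.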

Scenario: Disarm security system

The primary purpose of the RealGuardIO application is to enable authorized users - customers, dealers and monitoring providers - to manage security systems. This includes operations such as arming and disarming security systems. In this section, I focus on authorizing the disarm operation, but authorization checks for other management operations follow a similar pattern. You’ll see that authorization checks for the disarm operation are quite complex and require authorization data from multiple services. As a result, the disarm operation is probably not a good candidate for using access token-based authorization.

A user can disarm a security system if any of the following are true:

- The user is assigned the SECURITY_SYSTEM_DISARMER role in one of the following:
  - The security system’s location
  - The security system’s location’s company
  - The security system’s location’s dealer
  - The security system’s location’s dealer’s monitoring provider
- The user belongs to a team that is assigned the SECURITY_SYSTEM_DISARMER role in the security system’s location

Furthermore, if the user is a time-restricted user, then the current time must be an allowed time.
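To make the shape of this rule concrete, here is a small sketch that evaluates it over in-memory data. It makes several assumptions that are not from the article: the AuthorizationData structure and its field names are hypothetical, IDs are unique across locations, companies, dealers and monitoring providers, and a time restriction is a single daily window that does not cross midnight.

```python
from dataclasses import dataclass
from datetime import time

DISARMER = "SECURITY_SYSTEM_DISARMER"


@dataclass(frozen=True)
class Location:
    location_id: str
    company_id: str
    dealer_id: str
    monitoring_provider_id: str   # the dealer's monitoring provider


@dataclass
class AuthorizationData:
    role_assignments: set   # (user_id, role, scope_id)
    team_members: set       # (team_id, user_id)
    team_roles: set         # (team_id, role, location_id)
    allowed_hours: dict     # user_id -> (start, end); absent means unrestricted


def can_disarm(user_id, location, data, now):
    """location is the location of the security system being disarmed."""
    scopes = (location.location_id, location.company_id,
              location.dealer_id, location.monitoring_provider_id)
    # Role assigned directly at the location, company, dealer or monitoring provider
    direct = any((user_id, DISARMER, s) in data.role_assignments for s in scopes)
    # Role assigned to one of the user's teams at the location
    via_team = any(
        (team_id, user_id) in data.team_members
        for (team_id, role, loc_id) in data.team_roles
        if role == DISARMER and loc_id == location.location_id
    )
    if not (direct or via_team):
        return False
    window = data.allowed_hours.get(user_id)
    if window is None:
        return True
    start, end = window
    return start <= now < end
```

The function itself is short. The difficulty discussed in the rest of this section is that the sets it reads from are owned by several different services.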

In the RealGuardIO application, the Security System Service is responsible for security systems and implements a PUT /securitysystems/{securitySystemId} endpoint for arming and disarming a security system.

To understand the challenges with implementing the authorization check for this endpoint, let’s first look at a simplified set of authorization requirements.

After that, I’ll describe the full version of the authorization check.

Scenario: Disarm security system - simplified version

Let’s start with a greatly simplified authorization rule:

A user can disarm a security system if they have the SECURITY_SYSTEM_DISARMER role in the Customer organization that owns the SecuritySystem’s location

This rule ignores security system dealers, monitoring providers, and customer teams.

To enforce this rule, the Security System Service must verify that:

- The user has the SECURITY_SYSTEM_DISARMER role in the relevant Customer
- The SecuritySystem is at a Location that is owned by the Customer

In other words, the authorization check must traverse a chain of relationships between the user and the security system: CustomerEmployee → CustomerEmployeeRole → Customer → Location → SecuritySystem.

However, as the following diagram shows, the Security System Service only knows about SecuritySystems:

All the other entities - CustomerEmployee, CustomerEmployeeRole, Customer and Location - and the relationships between them are owned by the Customer Service.

The required information needs to somehow flow from the Customer Service to the Security System Service.

Let’s imagine passing the required information in the access token using the following claims:

- customerID - the ID of the user’s Customer organization
- customerRoles - the user’s roles in the Customer, e.g. ["Disarmer"]
- securitySystems - a list of securitySystemIDs

The Security System Service could then easily verify that the user has the SECURITY_SYSTEM_DISARMER role in the Customer organization that owns the security system’s location.
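Here is a minimal sketch of what that check might look like. It assumes that the token's signature, issuer and expiry have already been validated and that the decoded claims are available as a dictionary. The function name is hypothetical, and the role is assumed to appear in customerRoles under the name SECURITY_SYSTEM_DISARMER.

```python
DISARMER = "SECURITY_SYSTEM_DISARMER"


def can_disarm_from_claims(claims: dict, security_system_id: str) -> bool:
    """Decide using only the claims of an already validated access token."""
    has_role = DISARMER in claims.get("customerRoles", [])
    # securitySystems is assumed to list every system owned by the user's Customer
    owned_by_customer = security_system_id in claims.get("securitySystems", [])
    return has_role and owned_by_customer


example_claims = {
    "sub": "user-1",
    "customerID": "customer-1",
    "customerRoles": [DISARMER],
    "securitySystems": ["ss-1", "ss-2"],
}
```

The check needs no database query and no remote call, which is what makes the approach attractive at first glance.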

On the surface, this seems like a remarkably simple solution. However, as the following table shows, this approach has several significant issues:

| Issue | Analysis |
|---|---|
| IAM Service complexity and coupling | High - it must obtain the user’s Customer and roles from the Customer Service |
| Freshness of claims | Less stable - a user’s role in an organization can change frequently |
| Access token size | Potentially large since an organization can have many security systems |
| Security risk | Low |

The first problem is that the Customer-Location relationship is a one-to-many relationship.

If a customer owns a large number of locations, then there might be too many security system IDs to store in the access token.
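A back-of-the-envelope calculation shows how quickly this grows. The sketch below assumes that security system IDs are UUIDs, which the article does not state, and measures only the base64url-encoded payload, ignoring the JWT header and signature.

```python
import base64
import json
import uuid


def encoded_payload_size(n_systems: int) -> int:
    """Length in characters of the base64url-encoded JWT payload."""
    claims = {
        "sub": "user-1",
        "customerID": "customer-1",
        "customerRoles": ["SECURITY_SYSTEM_DISARMER"],
        # Deterministic placeholder UUIDs standing in for real IDs
        "securitySystems": [str(uuid.UUID(int=i)) for i in range(n_systems)],
    }
    payload = json.dumps(claims, separators=(",", ":")).encode()
    return len(base64.urlsafe_b64encode(payload).rstrip(b"="))
```

Under these assumptions, each ID adds about 39 bytes of JSON (36 characters plus quotes and a comma), or roughly 52 characters once encoded. A customer with 1,000 security systems would therefore need a payload of more than 50,000 characters, far more than the roughly 8 KB that many HTTP servers accept for request headers by default.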

One way to avoid passing those IDs in the access token is for the Security System Service to store the customerID in each SecuritySystem.

The customerID would be a parameter of the createSecuritySystem() operation.

While customer ownership is not a core responsibility of the Security System Service, this design change might be a worthwhile trade-off to shrink the access token.
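A sketch of this variation follows. The class and method names are hypothetical Python renderings of the article's createSecuritySystem() and disarm() operations, and the claims are again assumed to come from an already validated token.

```python
from dataclasses import dataclass

DISARMER = "SECURITY_SYSTEM_DISARMER"


@dataclass
class SecuritySystem:
    security_system_id: str
    customer_id: str        # stored locally so the token need not list system IDs
    state: str = "ARMED"


class SecuritySystemService:
    def __init__(self):
        self._systems = {}

    def create_security_system(self, security_system_id, customer_id):
        system = SecuritySystem(security_system_id, customer_id)
        self._systems[security_system_id] = system
        return system

    def may_disarm(self, claims, security_system_id):
        system = self._systems.get(security_system_id)
        return (
            system is not None
            and claims.get("customerID") == system.customer_id
            and DISARMER in claims.get("customerRoles", [])
        )

    def disarm(self, claims, security_system_id):
        if not self.may_disarm(claims, security_system_id):
            raise PermissionError("not allowed to disarm this security system")
        self._systems[security_system_id].state = "DISARMED"
```

With this change the token only needs customerID and customerRoles, so its size no longer depends on how many security systems the customer owns.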

The second problem with this approach is that it requires the IAM Service to know the user’s Customer and their roles within it.

It must obtain this information from the Customer Service using either the fetch strategy or replicate strategy.

As I described earlier in the section Obtaining remote authorization data, coupling the IAM Service to backend services is not something to be undertaken lightly.

The third problem with this approach is that, unlike the CustomerEmployee-Customer relationship, an employee’s roles in a Customer are not stable.

When they change, the access token will not reflect those changes until it is reissued.

As a result, a customer employee will be able to arm and disarm systems that they should not be able to.

Or conversely, they will not be able to arm and disarm systems that they should be able to.

In addition to resulting in a poor user experience, this can also result in security risks.
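The staleness window can be illustrated with a small simulation. Everything here is a simplification made for the example: the IamService class is a hypothetical stand-in that snapshots a user's roles when it issues a token, time is an integer number of seconds, and no real JWT is created.

```python
from dataclasses import dataclass

DISARMER = "SECURITY_SYSTEM_DISARMER"


@dataclass(frozen=True)
class AccessToken:
    sub: str
    customer_roles: tuple   # snapshot taken when the token was issued
    expires_at: int         # seconds


class IamService:
    """Hypothetical issuer that copies the user's current roles into the token."""

    def __init__(self, ttl_seconds):
        self.ttl_seconds = ttl_seconds
        self.current_roles = {}   # sub -> set of roles, as known at this moment

    def issue(self, sub, now):
        roles = tuple(sorted(self.current_roles.get(sub, ())))
        return AccessToken(sub, roles, now + self.ttl_seconds)


def token_allows_disarm(token, now):
    return now < token.expires_at and DISARMER in token.customer_roles


iam = IamService(ttl_seconds=900)
iam.current_roles["alice"] = {DISARMER}
old_token = iam.issue("alice", now=0)
iam.current_roles["alice"] = set()        # role revoked shortly after issuance
new_token = iam.issue("alice", now=600)
```

Here old_token still authorizes a disarm at 600 seconds even though the role has been revoked, while new_token does not. In this model the window of incorrect decisions is bounded by the token lifetime, so shortening the lifetime narrows the window at the cost of more frequent token issuance.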

As you can see, implementing this relatively simple authorization check is not as straightforward as it might seem.

Passing application data in the access token introduces potentially undesirable coupling.

Also, stale authorization data can result in a poor UX and security risks.

Let’s now look at the full version of the disarm() operation’s authorization rules, which are significantly more complex and present even greater challenges for token-based authorization.

Scenario: Disarm security system - full version

Implementing the full version of the authorization check is even more challenging. There are three reasons why:

- If the user is an employee of a dealer or a monitoring provider, then the Security System Service will also need to traverse relationships in the Security System Dealer Service and the Monitoring Provider Service in addition to the Customer Service’s Location-SecuritySystem relationships.
- Regardless of the type of user, the Security System Service also needs to traverse Employee-Team-TeamLocationRole-Location relationships.
- If the user is a shift-based employee, then the Security System Service must also verify that the current time falls within the user’s scheduled working hours.

The following diagram illustrates the authorization data required when the user has the SECURITY_SYSTEM_DEALER_EMPLOYEE application-level role:

In theory, the IAM Service could obtain the required information from the Customer Service, Security System Dealer Service, and Monitoring Provider Service and pass it in the access token.

However, this approach runs into two problems.

- The Team concept means that a user has access to an arbitrary subset of a Customer’s security systems and that their roles can vary by location. Capturing these complex relationships in a JWT would require encoding a large amount of data, likely exceeding practical token size limits.
- For shift-based employees, an access token’s lifetime might not align with the time window during which the user is allowed to disarm the security system. This would further reduce the effectiveness of using access tokens for authorization. A better approach would be to pass the hopefully compact schedule in the access token and have the Security System Service verify that the current time corresponds to the user’s shift.
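As a sketch of the schedule idea in the second item, the following assumes a hypothetical schedule claim: a list of shifts, each with weekday numbers (Monday is 0) and start and end times in HH:MM format. The claim name and format are not from the article, shifts are assumed not to cross midnight, and the time passed in is assumed to already be in the time zone the schedule is expressed in.

```python
from datetime import datetime, time


def within_shift(schedule, now: datetime) -> bool:
    """Return True if now falls inside any shift in the schedule claim."""
    for shift in schedule:
        start = time.fromisoformat(shift["start"])
        end = time.fromisoformat(shift["end"])
        if now.weekday() in shift["days"] and start <= now.time() < end:
            return True
    return False


# Hypothetical claim value: Monday to Friday, 09:00 to 17:00
example_schedule = [{"days": [0, 1, 2, 3, 4], "start": "09:00", "end": "17:00"}]
```

Because the service evaluates the schedule on every request, the decision tracks the current time rather than the moment the token was issued. A change to the schedule itself would still only take effect when the token is reissued.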

As you can see, there are several drawbacks to passing the authorization data needed by the Security System Service in an access token.

It couples the IAM Service to many backend services.

The data might be too large to actually fit in an access token.

There’s a very real risk of stale authorization data resulting in a poor user experience and potential security risks.

Given these issues, the provide strategy is ill-suited for these kinds of scenarios.

The fetch and replicate strategies, which will be explored in the next article, are a better fit.

Show me the code

The RealGuardIO application (work-in-progress) can be found in the following GitHub repository.

Acknowledgements

Thanks to Meghan Gill, Jacob Prall and YongWook Kim for reviewing this article and providing valuable feedback.

Summary

- To make an authorization decision, a service needs authorization data: information about the user - their identity and roles - along with application data - the business entities (a resource is a business entity), their attributes and relationships.
- There are three categories of authorization data:
  - built-in - includes the user’s identity and roles that are owned by the IAM Service and provided by the JWT-based access token
  - local - the service’s own data
  - remote - data owned by other services
- A service can use one or more of the following strategies to obtain remote data:
  - Provide - pass the application data in an access token, which, by default, just contains the user’s identity and application-level roles.
  - Fetch - fetch the data from the other service by, for example, calling its REST API
  - Replicate - maintain a replica of the data using event-driven synchronization, such as the CQRS pattern
- Another strategy is for backend services to delegate the authorization decision to an authorization service, which eliminates the need for the backend services to obtain the authorization data from other services.
- There are numerous trade-offs to consider when choosing an authorization strategy, including design-time coupling, correctness, performance and runtime coupling.
- Using access tokens to provide authorization data can simplify the service and improve its runtime behavior - particularly when the data is small and stable.
- Using access tokens to transport authorization data has several limitations and drawbacks:
  - Risk of adding complexity to the IAM Service and coupling it to the backend services that provide the authorization data.
  - Risk of stale authorization data because access tokens are only issued periodically by the IAM Service. This can result in authorization decisions being made based on outdated information, leading to a poor user experience and potential security risks.
  - Risk of reducing performance due to the overhead of transporting and processing large access tokens, or worse: exceeding access token size limits.
- Access tokens are a good fit when the authorization data is small and stable

What’s next?

The next article will explore how to implement authorization checks using the fetch and replicate strategies and describe how to apply them effectively in a microservice architecture.

The remaining two articles in this series will describe how to implement the delegate strategy using Oso.

